Reports Cases '07

Case studies have been performed for the following companies:


Case from UOCG

students: Gjalt Bearda & Wim Ottjes

supervisor: R. Smedinga

contact: L.A. van der Duim

This case concerned translating the homepage of the University Centre for Learning & Teaching (UCLT, known in Dutch as 'UOCG') into English. Until then, only a Dutch version of the homepage existed. The UCLT is already the regional and national information and expertise centre in its field, but aspires to an international pioneering role. It carries out projects abroad, in countries such as Ghana, Macedonia and Uganda, so there was a clear need for an English homepage. We, Gjalt and Wim, had to make sure that an English version of the homepage would be produced.

First we talked with several people from the UCLT to find out which parts of the homepage needed to be translated. We also talked with Johan Brondijk, the Dutch student who is currently responsible for the Dutch homepage. We concluded that it was best to translate everything except the information about courses that are taught in Dutch.

The next task was the actual translation of the homepage. We had a lot of help from Aletta Kwant and Margreet van Koert, two students who did almost all of the translation work. This was the part of the case that took the longest. After one student had translated several pages, the other student verified them before the translation was sent to us. We then reviewed it and sent it to the UCLT, where it was checked once more, because the technical terms sometimes made the translation difficult. To simplify the translation work we compiled a list of these technical terms. After this we published the translated text on the internet.

In total there were around 180 A4 pages (!). Translating everything took around 25 weeks, which means that about seven pages a week were translated and verified several times. When everything had been translated and uploaded, we checked the whole homepage for missing parts and poor translations. We would like to thank Johan Brondijk, Aletta Kwant and Margreet van Koert for all their work.

Case from Deloitte (I)

students: Else Starkenburg & Natasja Sterenborg

contact & supervisor: B. Reinink

Model Validation

Deloitte is an independent ‘member firm’ of the international Deloitte Touche Tohmatsu Verein. With approximately six thousand employees and offices spread across the whole of the Netherlands, Deloitte is the largest organization in the Netherlands in the field of accountancy, tax advice, consultancy and financial advice. Deloitte also has a department responsible for the development of financial models. Our case study originates from this more mathematically oriented department.

The purpose of our case was to program a Heston model in C# to determine the price of an option. An option is the right to buy or sell a certain good at a predetermined price within a previously agreed period. In our case these goods were shares. To obtain the right to buy or sell a share at a certain price, the buyer pays a certain amount of money. The price of an option compensates the seller for the financial risk taken on, but it also has to be low enough to interest buyers. A fair price is thus a balanced one, depending on the prices the underlying share could reach.

The existing framework for our assignment at Deloitte was a Black-Scholes Monte Carlo model. This model is based on the Black and Scholes option valuation formula, which gives an analytical expression for the option price. In the Monte Carlo simulation, a large number of different paths are simulated for the price of the underlying share, and in the end the mean over these paths is used. In the Black-Scholes approach the volatility, that is, the standard deviation of the price changes of the share, is considered constant. However, the share market shows that this assumption is incorrect. Our assignment was therefore to extend the existing model to one in which the volatility of the share is also modeled stochastically, in other words to create a “Heston model”.
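As an illustration of the extension described above, the sketch below prices a European call by Monte Carlo simulation with a stochastic variance process of the Heston type, and compares it with the closed-form Black-Scholes price. It is a minimal sketch and not the Deloitte implementation: the original was written in C#, the function names are our own, the Euler scheme with a floored variance is only one of several possible discretizations, and all parameter values are illustrative assumptions.

```python
import math
import random


def black_scholes_call(s0, strike, rate, maturity, sigma):
    """Closed-form Black-Scholes price of a European call (constant volatility)."""
    d1 = (math.log(s0 / strike) + (rate + 0.5 * sigma ** 2) * maturity) / (
        sigma * math.sqrt(maturity))
    d2 = d1 - sigma * math.sqrt(maturity)
    norm_cdf = lambda x: 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    return s0 * norm_cdf(d1) - strike * math.exp(-rate * maturity) * norm_cdf(d2)


def heston_call_price(s0, strike, rate, maturity, v0, kappa, theta, xi, rho,
                      n_paths=10000, n_steps=50, seed=42):
    """Monte Carlo price of a European call with Heston-type stochastic variance.

    v0: initial variance, kappa: mean-reversion speed, theta: long-run variance,
    xi: volatility of the variance, rho: correlation between the two noise sources.
    """
    rng = random.Random(seed)
    dt = maturity / n_steps
    sqrt_dt = math.sqrt(dt)
    payoff_sum = 0.0
    for _ in range(n_paths):
        log_s = math.log(s0)
        v = v0
        for _ in range(n_steps):
            z1 = rng.gauss(0.0, 1.0)
            # Second normal draw, correlated with the first through rho.
            z2 = rho * z1 + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
            v_pos = max(v, 0.0)  # floor the variance so the square root is defined
            log_s += (rate - 0.5 * v_pos) * dt + math.sqrt(v_pos) * sqrt_dt * z1
            v += kappa * (theta - v_pos) * dt + xi * math.sqrt(v_pos) * sqrt_dt * z2
        payoff_sum += max(math.exp(log_s) - strike, 0.0)
    # Discounted mean payoff over all simulated paths.
    return math.exp(-rate * maturity) * payoff_sum / n_paths


if __name__ == "__main__":
    # Illustrative parameters only; they are not calibrated to any market data.
    print("Black-Scholes:", black_scholes_call(100.0, 100.0, 0.05, 1.0, 0.2))
    print("Heston (MC):  ", heston_call_price(100.0, 100.0, 0.05, 1.0, v0=0.04,
                                               kappa=2.0, theta=0.04, xi=0.3, rho=-0.7))
```

Setting `xi=0` and `v0=theta` keeps the variance constant, so the simulation should then reproduce the Black-Scholes price up to Monte Carlo noise. This gives a simple sanity check on the code.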

For the implementation of our case, we had to learn a lot about the financial world and its underlying mathematics. This was accompanied by a great deal of programming. One enjoyable aspect of our case was that the assignment was clearly bounded. However, during the assignment we encountered some technical and non-technical obstacles. These ranged from correctly reading in input values to deciding on the right formulas for the calibration of the model. All this made our case a challenging one, from which we learned a lot. Combined with pleasant supervision from Deloitte, it eventually led to a final product that Deloitte can use.

Case from Deloitte (II)

students: Bernadette Kruijver & Thijs Hollink

contact & supervisor: B. Reinink

Hedge Funds Return

Supervised by Deloitte, we investigated the hedge fund universe, a perfect chance for us, as physicists, to meet the world of finance. Hedge funds are a relatively new form of investment that has recently received a lot of attention in the general media. Hedge funds differ from traditional investments in that they are closed to the general public: they are private and have a high investment threshold. Since they are private institutions, they are allowed to use investment instruments that are not available to more regulated investors, such as mutual funds. Among these instruments are short selling, leverage and more complex derivatives. This allows for investment strategies that are very different from traditional strategies.

Since hedge funds are private, they are not obliged to disclose inside information about their assets and results. To be able to investigate hedge funds, we therefore turned to so-called hedge fund indices. For this case study we investigated two benchmarks for the general hedge fund industry: an investable index (FTSE Hedge) and a non-investable index (HFRI). Both benchmarks are split into several subindices, based on investment strategy. Next, we compared the investable index with benchmarks of traditional investments, such as stocks, bonds, commodities and real estate.

Indices are interesting to investors if they combine high results with a small standard deviation. While the mean monthly results of the investable FTSE Hedge index are always lower than those of the non-investable HFRI index, the standard deviation is smaller in half of the cases, leading to less risky investments. The results of the hedge fund indices do not follow a normal distribution, but a log-normal distribution, like the results of ordinary stock. Hypothesis testing indicates that FTSE and HFRI do not always come from the same source distribution, which means that they have a different constitution. However, the FTSE indices all correlate with the HFRI indices.
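The quantities compared above (mean monthly result, standard deviation, and correlation between two indices) can be computed as in the following minimal sketch. The function names are our own, and the return series in the example are invented purely for illustration. They are not FTSE Hedge or HFRI data.

```python
import math
import statistics


def summarize(returns):
    """Return (mean, sample standard deviation) of a series of monthly returns."""
    return statistics.mean(returns), statistics.stdev(returns)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long return series."""
    if len(xs) != len(ys):
        raise ValueError("series must have the same length")
    mean_x, mean_y = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Made-up monthly returns in percent, for illustration only.
    index_a = [0.8, 1.2, -0.5, 0.9, 0.3, 1.1]
    index_b = [1.0, 1.5, -0.9, 1.3, 0.2, 1.6]
    print("index A (mean, stdev):", summarize(index_a))
    print("index B (mean, stdev):", summarize(index_b))
    print("correlation A-B:", pearson(index_a, index_b))
```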

Almost all FTSE hedge fund indices show a correlation with the stock indices Dow Jones and S&P 500, and no correlation with the Goldman Sachs commodity index, the Lehman Bond index and the NCREIF Real Estate Index. The final product of our case study is a comprehensive and clear analysis of hedge fund performance, which is ready to be used by Deloitte.

To perform this analysis we had to read and learn a lot about statistics, finance and other things unfamiliar to physicists. Although the subject sometimes seemed unclear, we managed to get a good overview of this aspect of hedge funds. The project was carried out mostly from Groningen, with two visits to the Deloitte office in Amsterdam: once to outline the project and once to collect data and discuss the progress.

Case from Microsoft

students: Leo van Kampenhout, Samuel Hoekman Turkesteen & Wicher Visser

supervisor: S. Achterop

contact: M. Vermeulen

Microsoft is a worldwide computer software producer, best known for its operating systems MS-DOS and Windows. Microsoft also produces office software such as MS Office. In addition, Microsoft offers students, teachers and employees of universities and graduate schools an easy and inexpensive way of using its server and development software. This service is called Microsoft Developer Network Academic Alliance (MSDNAA). The software from this service is provided through a web-based management system called e-academy License Management System (ELMS).

The purpose of our case was to carry out market research into the penetration of MSDNAA products (such as Windows) in the university and graduate school market, and to find out where improvement is possible. In this research we found several difficulties that will have to be overcome in order to increase the penetration of MSDNAA products. To overcome these problems we formulated recommendations. We presented these results and recommendations at the Microsoft headquarters in Schiphol-Rijk.

We contacted 25 universities and graduate schools and interviewed them about the implementation and general use of ELMS. If any technical difficulty arose, we offered them a helping hand.

Of the institutes questioned, only 44 percent indicated that they were content with MSDNAA/ELMS, four percent were dissatisfied and the remaining 52 percent were conditionally content.

One of the biggest problems was the setup and maintenance documentation (38 percent). The existing documentation was too extensive or too classified to use. We suggested supplying institutes with simpler documentation, published on a website. Another problem was system management at the institutes (32 percent). System managers are a different target group than students, and they need to be motivated in order to implement ELMS. Finances are always a big issue as well (15 percent), although this is more a problem for Microsoft than for the institute. Payments for MSDNAA/ELMS subscriptions are always made by a financial administration, which is separate from the educational administration that holds the subscription. In most cases payment is made later than contractually agreed. We suggested some form of automatic renewal of contracts.

In our research we also found that the database Microsoft uses is not very reliable: the data was not up to date and the database was inconsistent. We suggested checking the institutes on a yearly basis and using a single protocol (for example a web form) to fill in the database.

One less enjoyable aspect of the case was that it was not very clearly bounded. On the other hand, it was quite a different kind of case for us, since we are science students and this lies more in the marketing and economics field. We learned a couple of things about carrying out real market research and about formulating clear targets.

Case from WL Delft Hydraulics

students: Sietze van Buuren & Anisa Salomons

supervisor: A.E.P. Veldman

contact: S. de Kleermaeker

Two-phase air-water circuit

By Sietze van Buuren and Anisa Salomons

WL Delft Hydraulics presently has two experimental set-ups for research on air bubbles in water transport systems. They plan to build a third two-phase air-water circuit. The characteristics of the equipment for this set-up have yet to be determined.

The circuit will be used to extend the one-phase ‘Wanda’ software, which WL Delft Hydraulics has developed, to two phases. This case study investigates what recent research has been done on air-water two-phase flow, in particular which flow regimes can be generated, under which circumstances, and when flow regime transitions occur.

There are three main flow patterns: bubble flow, slug flow and stratified flow. Bubble flow consists of spherical air bubbles evenly dispersed in the water. Slug flow is characterized by one large air bubble, almost the size of the tube diameter, followed by a froth of smaller bubbles. Finally, stratified flow is characterized by the two phases flowing parallel to each other in the tube. The division into three flow patterns is not widely accepted: there are many flow patterns in between these three, and there is no single standard for distinguishing between them.

Due to its complex nature, this field of study has long been dominated by empirical studies of these flow patterns. The different flow patterns used to be distinguished by human observation. More recently it has become possible to measure the bubble size and to distinguish flow regimes in a more consistent manner. Research on two-fluid models has been ongoing since the seminal work of Taitel and Dukler in 1976. The research is shifting from experimental correlations between water velocity, air bubble velocity, tube diameter, pressure and the flow regime, towards models and computational fluid dynamics.

The report gives an overview of the most relevant recent articles on two-phase flow, focused on the pressure gradient and regime transitions. Two-phase flow is very complex and difficult to model. No single model captures all aspects, and each model has its own range in which it performs best. As we are not experts in this field, we have not made a choice between the various models.

Effectiveness of a pump without moving parts.

By Sietze van Buuren

In the second part of the case the goal was to simulate a motionless water pump and determine its effectiveness.

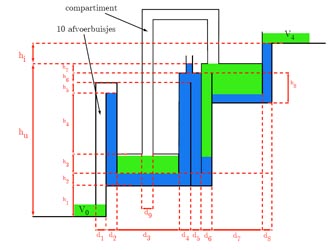

Paul de Vries, an employee at Corus, came up with the idea of pumping water (or another liquid) to a higher level using compression. By means of a siphon, this compression is built up by a part of the water that is diverted to a lower level. Fig 1 shows the schematic layout of the pump. The idea was brought to WL Delft Hydraulics, who thought it would be useful for educational purposes and are planning to make a demo model. For this, a study was needed to find the optimal parameters of the pump.

Fig 1: A schematic layout of the pump

Because there was only a limited amount of time for this part of the case (two weeks had already been spent on the literature study of two-phase flow), a simple theoretical model was conceived. In this model friction was not taken into account and, due to the shortage of time, robustness and physical correctness were not looked into. Still, some preliminary studies of the effectiveness could be performed. For the pump parameters proposed by the inventor, the effectiveness turned out to be around ten percent, which is about 25 percent lower than the originally expected effectiveness.

It is very likely that a better set of parameters exists. Furthermore, friction will influence the effectiveness negatively, while inertia can influence it positively. Before the demo model is constructed, more research is needed: friction as well as inertia have to be included in the model, after which an extensive parameter study is to be performed.

Case from UB

students: Stefan Postema

supervisor: S. Achterop

contact: H. Ellerman

My case study was for the library of the university (UB). They would like to have an implementation of the SRU/SRW protocol. This protocol is a standard for transferring information from so-called repositories to the outside world. SRU stands for Search/Retrieve via URL and SRW stands for Search/Retrieve Web Service. These repositories contain not only data collected by the university (books, etc.) but also, for instance, data from a museum. Via the web you can then use a standard protocol to retrieve the data and decide for yourself how you would like to present it.

As the name says, you perform a search via the URL you are requesting, and you get a response from the server in XML. This response follows a defined syntax.
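To make the "search via the URL" idea concrete, the sketch below assembles an SRU `searchRetrieve` request URL. The parameter names (`operation`, `version`, `query`, `startRecord`, `maximumRecords`) are the standard SRU 1.1 request parameters. The base URL, the helper name `build_sru_url` and the example query are hypothetical and are not taken from the UB implementation.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_sru_url(base_url, cql_query, max_records=10, start_record=1, version="1.1"):
    """Build an SRU searchRetrieve URL; the server would answer with XML."""
    params = {
        "operation": "searchRetrieve",
        "version": version,
        "query": cql_query,            # the search itself, written in CQL
        "startRecord": start_record,   # 1-based position of the first record wanted
        "maximumRecords": max_records,
    }
    return base_url + "?" + urlencode(params)


if __name__ == "__main__":
    # Hypothetical endpoint, used only to show the shape of the request.
    url = build_sru_url("https://repository.example.org/sru",
                        'dc.title = "fluid dynamics"', max_records=5)
    print(url)
    # Decoding the query string again shows that the CQL survives the URL encoding.
    print(parse_qs(urlsplit(url).query)["query"][0])
```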

The UB already uses something similar to SRU/SRW, called Open Archives Initiative (OAI). But when SRU/SRW is implemented completely, it is much more powerful than OAI.

The search query isn't passed on in normal SQL syntax but in CQL, the Common Query Language. It is much more readable and easier for humans to understand. The disadvantage is that you have to build an interpreter to map the CQL commands onto your own database (e.g. MySQL), and that is usually the hardest part. But SRU/SRW is becoming more popular and more libraries support this service. Maybe one day it will become a standard for retrieving data from libraries, and the CQL syntax will become standard in widely used database engines.
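The mapping from CQL onto a database can be illustrated with a deliberately tiny translator. This is a sketch under strong assumptions, not the interpreter the case calls for: it only understands clauses of the form `index = "term"` joined by `and`/`or`, the index-to-column mapping and the table name are hypothetical, and everything else in CQL (other relations, `not`, `prox`, parentheses, modifiers) is rejected.

```python
import re

# Hypothetical mapping from CQL index names to columns of a local table.
INDEX_TO_COLUMN = {"dc.title": "title", "dc.creator": "author"}

# One token is either a clause  index = "term"  or a boolean operator.
TOKEN = re.compile(r'\s*(?:([\w.]+)\s*=\s*"([^"]*)"|(and|or)\b)', re.IGNORECASE)


def cql_to_sql(cql, table="records"):
    """Translate a very small CQL subset into a parametrized SQL query."""
    text = cql.strip()
    pos = 0
    sql = None          # SQL condition built so far
    pending_op = None   # boolean operator waiting for its right-hand clause
    params = []
    while pos < len(text):
        match = TOKEN.match(text, pos)
        if match is None:
            raise ValueError(f"unsupported CQL near: {text[pos:]!r}")
        index, term, boolean = match.groups()
        if boolean is not None:
            if sql is None or pending_op is not None:
                raise ValueError("boolean operator without a preceding clause")
            pending_op = boolean.upper()
        else:
            column = INDEX_TO_COLUMN.get(index)
            if column is None:
                raise ValueError(f"unknown index: {index}")
            condition = f"{column} LIKE ?"
            params.append(f"%{term}%")
            if sql is None:
                sql = condition
            elif pending_op is None:
                raise ValueError("missing boolean operator between clauses")
            else:
                # CQL booleans share one precedence and associate left to right,
                # so each step is parenthesized explicitly for SQL.
                sql = f"({sql} {pending_op} {condition})"
                pending_op = None
        pos = match.end()
    if sql is None or pending_op is not None:
        raise ValueError("query must end with a search clause")
    return f"SELECT * FROM {table} WHERE {sql}", params


if __name__ == "__main__":
    print(cql_to_sql('dc.title = "fluid" and dc.creator = "Taitel"'))
```

The search terms are passed as parameters instead of being pasted into the SQL string, so user input in the URL cannot alter the statement.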

Along the way it became clear that the job was too big for one person to do in three weeks, but a start was made on the implementation and it was demonstrated to work. It didn't support CQL, but it did deliver the results in XML. The search was simulated using SQL queries.

Case from NAM

students: Jan Smit & Duurt Johan van der Hoek

supervisor: A.E.P. Veldman

contact: R. Broos & W. Sturm

The gas flow through pipelines in the North Sea is constantly monitored by NAM. In order to better understand and predict what is happening inside these pipelines, the Lagosa model was designed. Using an Excel tool called the Disaster Recovery Tool, certain periods of time are simulated with Lagosa. Before a simulation is started, a list of times has to be generated, to which certain events, namely the launches of so-called pigs (objects that clean the pipelines), have to be added. Doing this by hand is time-consuming, so an Excel Visual Basic application was designed to do it automatically.

Furthermore, it is interesting to compare simulation results with actual measurements. The remainder of the case was spent on presenting this comparison clearly. More VBA subroutines were designed to generate graphs and also to correct some measurement data. This last part was somewhat tricky. The pigs cause large surges of fluids (called slugs) to arrive at the end of the pipeline, in the so-called slug catcher. The out-flowing liquids are partly water and partly oil-like substances. Since this mixture has time to settle into layers and the draining takes place from below, the water flows out first, followed by the oil-like substances. The continuous measurements that determine the fluid level in the slug catcher, however, assume a uniform density and therefore give a wrong level indication. More VBA subroutines were used to present corrected fluid levels. The general solution was quite complicated, since quite a few anomalies could occur in the data and the program is supposed to handle all of them correctly. After this data correction, a subroutine plots similar data together in the same graph. This simplifies checking whether the Lagosa tool runs correctly and can help to upgrade the Lagosa model.

Case from RSP Technology

students: Anna Dinkla

supervisor: J. T. M. de Hosson

contact: A. Bosch

RSP Technology is a Dutch company that produces aluminum alloys for the automotive industry and, more recently, also for the optical industry. RSP produces the alloys through the process of rapid solidification, hence the name RSP. In this process, also called melt spinning, aluminum and additional alloying elements are melted at a temperature of about 850 degrees Celsius. In this liquid phase the mixture hits a fast-rotating copper wheel, which almost instantaneously releases a continuous metal ribbon at room temperature that is then chopped into flakes. After a few more production steps (such as compacting the flakes and pressing them into a billet), the billet is extruded into a profile.

For the production of the RSA-6061 T6 alloy, the profile will undergo a T6 heat treatment. This alloy can be made into high quality mirror surfaces with very low roughness of 1-5 nm (conventional AA6061 has a roughness Ra of 5-10 nm).

For optical applications such as mirrors, a fine microstructure of the rapidly solidified alloy is of great importance. However, since this alloy is produced only by RSP Technology, not much is known about its microstructure. Specific details of the microstructure, found by light microscope (LM) investigation, might influence the optical characteristics. The composition and shape of typical features must be determined, and having this information might point to a way to improve the alloy. RSP therefore asked whether a student could investigate the microstructure of this alloy. The analysis was performed using the LM and the scanning electron microscope (SEM). The latter gave us the opportunity to apply EDX (Energy Dispersive X-Ray) and OIM (Orientation Imaging Microscopy), two techniques that give qualitative and quantitative information about the elements present, and about the grain size and the distribution of existing phases.

In EDX analysis an electron beam strikes the surface of a conducting sample. This causes X-rays to be emitted from the point of impact on the material. The energy of the emitted X-rays depends on the material under examination, so a spectrum of the elements present can be created. In addition, an image of the distribution of the elements is made.

The other technique, OIM (Orientation Imaging Microscopy) is based on automatic indexing of electron backscatter diffraction patterns (EBSP). OIM provides a complete description of the crystallographic orientations in polycrystalline materials.

For good OIM patterns, we needed to try different methods of polishing the material. The best method turned out to be precision ion polishing (PIP). Unfortunately this was a time-consuming method. Since I had never worked with these microscopes before, it was quite a lot of work to do the analysis, but I think I learned a lot from it. I was given the responsibility to do this project on my own and to work with these machines. I hope I helped RSP Technology to gain more insight into their product and to optimize it. Finally, I would like to thank Gert ten Brink and prof. dr. De Hosson of the Material Science department for taking the time to teach me how to use the equipment, letting me use it and helping me throughout the project.

Case from Hi Light

students: Pim Lubberdink

supervisor: M. Vencelj & H. J. Wortche

contact: H. Koopmans

In nuclear physics, signal analysis mainly involves analyzing pulses. This is in contrast to, for example, telecommunication, where the main focus is on periodic signals. Although the latter has gained greatly from the emergence of digitizers, the methods used in nuclear physics for analyzing digital signals are very often literal copies of their analog predecessors, which often do not make full use of the possibilities of digitization.

A specific problem where the current state of the art in digital pulse analysis can be dramatically improved is determining the arrival time of pulses. This report describes the results of a case study on the timing of digitized pulses, performed for Hi-Light Opto Electronics in cooperation with the KVI.

One of the practical applications where timing of digitized pulses plays a crucial role is in the Positron Emission Tomography (PET) scanner, used in hospitals to create 3D images of patients. Although the results of the research aren't limited to the PET scan, this application can be used as a good illustration.

To make a PET scan, the patient gets an injection of a radioactive isotope which decays by positron emission (beta-plus decay). The radioactive isotope is incorporated in a metabolically active molecule (glucose or water, for example), so that it will concentrate in the region of interest. When a positron is emitted, it will almost immediately annihilate with an electron, creating a photon pair (emitted at the same instant). Since the positron and electron are at rest during annihilation, conservation of momentum requires that the two photons travel in exactly opposite directions (180 degrees apart). These photon pairs can be detected by a combination of a scintillator crystal and a photomultiplier tube (PMT). A PET scanner consists of a large number of these scintillator/PMT combinations, surrounding the patient.

Current PET scanners have a time resolution of about twenty ns (six meters) for each coincidence measurement. Since this resolution is so coarse, the PET scanner can only determine, for a given coincidence event, on which line (connecting two PMTs) the source of radiation lies. By using intersections of these lines from different coincidence events, a 3D image can be generated. With this time resolution the patient has to receive a fairly large amount of the radioactive tracer molecule and has to lie still in the scanner for about an hour.

With better time resolutions, one can use the time difference between the two photons created by the annihilation of the positron to determine where on the line connecting two PMTs the annihilation took place. This technique is known as time of flight. By improving the time resolution of the timing of digitized pulses created by the scintillator/PMT combination, time of flight calculations become possible. Improving the time resolution will reduce the amount of tracer necessary and will decrease the time the patient has to be in the scanner to a few minutes, clearly improving the comfort of the patient.

Although the concept of time of flight has been around for a while, currently only one commercial PET scanner (Philips Gemini TF) has a time resolution good enough to use this idea. However, most of the research is currently concentrated on improving the scintillator crystals, and this is also the case for the Gemini TF. To improve the timing of digitized pulses, we use a different approach, concentrating on fast digitizing and numerical methods. With our approach we can reach time resolutions as small as 300 ps (nine cm), with a sub-optimal PMT/scintillator crystal combination. This is obviously a great improvement compared to the six meters resolution of conventional PET scanners. We believe we can get even better resolutions when applying our methods to crystals and PMTs that are used in the medical industry today.
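The distances quoted next to the time resolutions follow from multiplying the time resolution by the speed of light. The short sketch below reproduces that arithmetic; the function names are our own. It also shows the corresponding uncertainty in the position of the annihilation along the line between two detectors, which is half that distance, because a shift of the source by x changes the arrival-time difference by 2x/c.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def light_path_length(time_resolution_s):
    """Distance light travels during the given time resolution, in metres."""
    return SPEED_OF_LIGHT * time_resolution_s


def position_uncertainty(time_resolution_s):
    """Uncertainty of the source position along the detector line, in metres."""
    return 0.5 * SPEED_OF_LIGHT * time_resolution_s


if __name__ == "__main__":
    for label, dt in [("20 ns", 20e-9), ("300 ps", 300e-12)]:
        print(f"{label}: light path {light_path_length(dt):.3f} m, "
              f"position uncertainty {position_uncertainty(dt):.3f} m")
```

For 20 ns this gives roughly six meters and for 300 ps roughly nine centimeters, matching the figures in the text.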

Case from Thales

students: Fabio Bracci, Ruben van der Hulst, Anneke Praagman & Gijs Noorlander

supervisor: S. Achterop & J. den Hartog

contact: J. Troost

THALES Nederland

THALES is a global defence contractor employing 61,000 people in fifty locations around the world, with sales in excess of ten billion euros a year. THALES Netherlands is a subsidiary of THALES and a market leader in military communications and radar technology. Located in Hengelo, THALES Naval Netherlands is the largest defence company in the Netherlands and concentrates on naval systems such as command and control, communications and radar technology. Examples of products manufactured at THALES Hengelo are the multi-function radar APAR and the naval self-defence system Goalkeeper. THALES employs about 2,000 people in the Netherlands. Besides naval systems, activities in the Netherlands include communications, optronics, munitronics, cryogenics and security.

The case-study-assignment

By Ruben and Gijs

For the Concept Development & Experimentation laboratory of THALES Naval Netherlands, we were asked to investigate several techniques used in VoIP (Voice over IP, in other words telephony over the internet). One of the problems in traditional telephony is the existence of a central server and the centralized management of the telephone numbers in use. This design makes maintenance a lot easier, but it also introduces a single point of failure: if such a telephone exchange is shut down, no communication is possible. One way to overcome this problem is to list all telephone extensions on all local exchanges, so that people can still reach each other even when a part of the network is shut down. However, this imposes a lot of maintenance whenever extensions are added or changed. This problem can be handled by a protocol called DUNDi (Distributed Universal Number Discovery). In short, it is like asking your neighboring peer whether it knows how to reach a certain phone extension or VoIP client: a kind of peer-to-peer phonebook. We also described and tested many other techniques involved with VoIP and with Asterisk, an open-source implementation of a PBX (Private Branch eXchange, a telephone exchange).
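The idea of asking neighboring peers for an extension can be sketched as a hop-limited search over a small network of exchanges. This is a toy model under our own assumptions and does not reproduce the DUNDi wire protocol or the Asterisk configuration: the `Peer` class, the `lookup` function, the extension numbers and the dial strings are all hypothetical.

```python
class Peer:
    """A local exchange that knows its own extensions and its direct neighbours."""

    def __init__(self, name, extensions=None):
        self.name = name
        self.extensions = dict(extensions or {})  # extension -> how to reach it
        self.neighbours = []


def lookup(start, extension, ttl=3):
    """Search for an extension, forwarding the question at most `ttl` hops.

    Peers are asked level by level (direct neighbours first), and every peer
    is asked only once, so loops in the network do not cause endless queries.
    """
    frontier = [start]
    seen = {start.name}
    for _ in range(ttl + 1):
        next_frontier = []
        for peer in frontier:
            if extension in peer.extensions:
                return peer.extensions[extension]
            for neighbour in peer.neighbours:
                if neighbour.name not in seen:
                    seen.add(neighbour.name)
                    next_frontier.append(neighbour)
        frontier = next_frontier
    return None  # nobody within reach knows the extension


if __name__ == "__main__":
    # Three exchanges in a chain A - B - C; only C knows extension 301.
    a, b, c = Peer("A", {"101": "SIP/101@a"}), Peer("B"), Peer("C", {"301": "SIP/301@c"})
    a.neighbours, b.neighbours, c.neighbours = [b], [a, c], [b]
    print(lookup(a, "301"))         # found via B and C
    print(lookup(a, "301", ttl=1))  # C is two hops away, so this fails
```

Because no exchange holds the complete list, adding an extension only requires a change on the exchange that owns it, and the loss of one exchange leaves the rest of the phonebook reachable.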

By Fabio & Anneke

Besides the task above, there are problems with communication between systems that use different protocols. A widely used protocol is SIP, which is used in many popular VoIP services; another protocol is H.323. Our task was to find out how to manage clients that each use one of these two protocols from a single VoIP software telephone exchange.

The H.323 standard is part of the H.32x family of real-time communication protocols, developed under the auspices of the ITU-T (International Telecommunication Union - Telecommunication Standardization Sector). Each protocol in the family addresses a different underlying network architecture, e.g. a circuit-switched network, B-ISDN, LAN with QoS, and LAN without QoS (H.323). H.323 is not an individual protocol but rather a complete, vertically integrated suite of protocols that defines every component of a conferencing network: terminals, gateways, gatekeepers, MCUs and other feature servers.

This is all in contrast to the Session Initiation Protocol (SIP), a simple protocol from the Internet Engineering Task Force (IETF) that specifies only what it needs to. SIP does not know about the details of a session; it just initiates, terminates and modifies sessions. SIP is a request-response protocol that closely resembles two other Internet protocols, HTTP and SMTP. Using SIP, telephony becomes another web application and integrates easily into other Internet services.

In addition to this, we had to implement a connection between such a software telephone exchange and the regular telephone network. Another problem is the manageability of the software exchange, e.g. how to handle upgrades while keeping the same telephone network configuration.
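The resemblance between SIP and HTTP is easiest to see in the text of a request: a start line with method, target and version, followed by `Name: value` headers and an optional body. The sketch below parses a minimal SIP INVITE in exactly the way one would parse an HTTP request. The message is a made-up example with placeholder addresses, and the parser is a simplification that ignores details such as header folding and compact header names.

```python
# A minimal, made-up SIP request; hosts, tags and identifiers are placeholders.
EXAMPLE_INVITE = "\r\n".join([
    "INVITE sip:bob@example.com SIP/2.0",
    "Via: SIP/2.0/UDP pc33.example.com;branch=z9hG4bK776asdhds",
    "Max-Forwards: 70",
    "To: <sip:bob@example.com>",
    "From: <sip:alice@example.com>;tag=1928301774",
    "Call-ID: a84b4c76e66710@pc33.example.com",
    "CSeq: 314159 INVITE",
    "Content-Length: 0",
]) + "\r\n\r\n"


def parse_sip_request(raw):
    """Split a SIP request into method, URI, version, headers and body."""
    head, _, body = raw.partition("\r\n\r\n")   # blank line separates headers and body
    lines = head.split("\r\n")
    method, uri, version = lines[0].split(" ", 2)  # start line, as in HTTP
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")        # split at the first colon only
        headers[name.strip().lower()] = value.strip()
    return method, uri, version, headers, body


if __name__ == "__main__":
    method, uri, version, headers, body = parse_sip_request(EXAMPLE_INVITE)
    print(method, uri, version)
    print(headers["from"], "->", headers["to"])
```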