Reports Cases '01

In April 2001 the committee Nippon '01 organized a trip to Japan. The following companies provided a case study for that study tour. The reports of these case studies follow below. They give a good impression of the possibilities, but companies are of course always welcome to come forward with other proposals.

- SKF

- NAM (I)

- NAM (II)

- RuG - Faculty of Medical Sciences (I)

- RuG - Faculty of Medical Sciences (II)

- ICT

- Gasunie (I)

- Gasunie (II)

- Mount Everest

- Océ


Case from SKF

students: Alexander Raaijmakers, Ivo van der Werff

contact: Dr. E. Vegter

supervisor: Prof. Dr. J.Th.M. de Hosson

Figure 1

Introduction

This case study was done for SKF, a Swedish company that specialises in producing high-quality ball bearings. A bearing consists of an outer cylinder, a set of rolling balls and an inner cylinder. The inner cylinder endures most of the loads and forces and is therefore the part most likely to fail.

SKF has done research to increase the lifetime of rollers. Some mathematical models have been constructed, which contain various parameters. Two of these parameters are the diameter and concentration of certain carbon impurities, called e-carbides. The objective of this study was to determine these parameters for a number of steels, using electron microscopy.

Methods and Materials

Electron microscopy is based on exposing the material to an electron beam. SEM (Scanning Electron Microscopy) forms images from the electrons that are scattered by the material; TEM (Transmission Electron Microscopy) forms images from the electrons that pass through the material.

E-carbides are tiny carbon impurities with a size of a few nanometers and a hexagonal structure. They should therefore only be visible with TEM, which is capable of very high resolutions. They could also be recognised by their diffraction pattern, which follows from the hexagonal structure. SEM images give less detail, but are useful for studying the general structure of the material.

Results

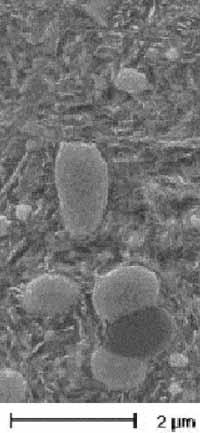

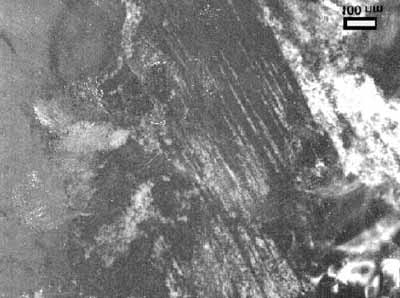

The SEM images gave useful information. The materials consisted of a martensite structure containing spherical carbon impurities. EDS (Energy Dispersive Spectroscopy) and diffraction patterns showed that these consisted of (Fe,Cr)7C3 (Fig. 1).

TEM analysis revealed a number of different carbide structures. However, none of them could be identified as e-carbide. The most interesting result was the presence of carbide between the laths of the martensite (Fig. 2). The light and dark lamellae are caused by the alternating orientation of the martensite matrix. On the boundaries between them, small deposits of cementite were found. In addition, some Moiré patterns were observed by TEM, all aligned in the same direction. These indicate the presence of precipitates in the martensite matrix that all share the same orientation relationship with the surrounding matrix.

Conclusion

E-carbides were not identified in any of the materials. However, several other carbides were observed. Moiré patterns give indirect evidence of the presence of precipitates with a distinct orientation with respect to the martensite matrix. The most striking result is the presence of cementite between the lamellae of the martensite. More research would be necessary to learn about the details of these structures.

Figure 2

Case from NAM (I)

students: Niels Maneschijn, Hugo Buddelmeijer

contact: W.M. van Gestel

Mission goals

To learn from previous incidents, the Materials and Corrosion Engineering division of NAM (Nederlandse Aardolie Maatschappij) keeps numerous failure reports on accidents caused by poor materials engineering. A small number of these reports had been indexed in an Access database. For the database to be of any use, all reports have to be entered. This was the primary goal of our case study. Before starting on this task we were asked to review the database structure. A secondary goal was to make the database system more user-friendly and to add some extra functionality, such as a search tool.

Accomplishments

Our review of the database system showed that there was no reason to replace the existing Microsoft Access database, considering that NAM and Shell use the Microsoft Office software worldwide, that our employers had previous experience with Access, and that the design of the existing database was sound. The database was therefore filled with information from the failure reports, such as the materials involved, temperatures, pressures, process environment and other relevant data. After processing all the failure reports and becoming better acquainted with the subject, we were able to polish a few details of the database design, such as the relations between tables, and to divide the materials into different categories. With these changes we were able to create a user-friendly search form that allows the end user to search on ranges of values that he or she specifies. The input form was also slightly redesigned, protecting the user from nonsensical input and making it easier to enter new information.
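The range search described above can be illustrated with a minimal sketch. It is not the original Access form: it uses Python's built-in `sqlite3` module as a stand-in, and the table name, column names and sample rows are all hypothetical.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with a hypothetical failure-report table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """CREATE TABLE failure_report (
               id INTEGER PRIMARY KEY,
               material TEXT NOT NULL,
               category TEXT NOT NULL,
               temperature_c REAL,
               pressure_bar REAL,
               environment TEXT
           )"""
    )
    # Invented sample rows, for illustration only.
    rows = [
        ("carbon steel", "steel", 60.0, 80.0, "wet gas"),
        ("13Cr", "stainless steel", 110.0, 250.0, "sour gas"),
        ("duplex", "stainless steel", 90.0, 150.0, "brine"),
    ]
    conn.executemany(
        "INSERT INTO failure_report "
        "(material, category, temperature_c, pressure_bar, environment) "
        "VALUES (?, ?, ?, ?, ?)",
        rows,
    )
    return conn


def search_reports(conn, category=None, temp_range=None, pressure_range=None):
    """Return material names matching the optional category and value ranges."""
    clauses, params = [], []
    if category is not None:
        clauses.append("category = ?")
        params.append(category)
    if temp_range is not None:
        clauses.append("temperature_c BETWEEN ? AND ?")
        params.extend(temp_range)
    if pressure_range is not None:
        clauses.append("pressure_bar BETWEEN ? AND ?")
        params.extend(pressure_range)
    where = (" WHERE " + " AND ".join(clauses)) if clauses else ""
    sql = "SELECT material FROM failure_report" + where + " ORDER BY id"
    return [row[0] for row in conn.execute(sql, params)]
```

Passing the user's values as query parameters rather than pasting them into the SQL text is one way to protect the search against nonsensical input.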

Case from NAM (II)

students: Jisk Attema, Evert-Jan Borkent

contact: Rob Jansen

During this case study at the Nederlandse Aardolie Maatschappij BV (NAM), a test unit at the Underground Gas Storage (UGS) in Norg was examined. The UGS is used for the storage of gas from the small gas fields throughout the Netherlands. The oversupply of gas during the summer months is pumped into the ground under a layer of salt. This gas can be used on cold winter days, when the capacity of the regular network is not sufficient.

Part of the UGS is a test unit that can test the production from a well. The well is tested on its flow rate, gas composition and gas-liquid ratio (the well produces a mix of gas, condensate and water). The capacity of the production line of the UGS was recently enlarged, while the test unit was designed for the former capacity. The aim of this case study was to determine whether the test unit can handle the current production capacity and, if not, what the bottleneck of the system is and how this problem can be solved.

The system consists of piping, valves, coolers and a gas-liquid separator. By looking up the specifications of all the equipment and calculating the effect of the increased capacity on the pressure, temperature, speed and density of the gas, the maximum capacity of each part of the test unit could be determined. It was found that the only bottleneck was the gas-liquid separation vessel, as at high flow rates the liquid and gas are not separated. Carrying out the flow measurement and the gas-liquid ratio measurement separately can solve this bottleneck without any investment. Another problem was the orifice plate that measures the flow, because it was unclear whether this plate would measure the flow accurately enough at higher flow rates. This last problem still has to be studied further. All the other equipment can handle the increased gas flow at the proposed production capacity. It can therefore be concluded that the capacity of the test unit is high enough to test the wells at the current production capacity.
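The kind of calculation described above can be sketched as follows. This is an illustration under stated assumptions, not the method used in the case study: gas density is taken from the real-gas law with a compressibility factor Z, gas speed from the mass flow and pipe cross-section, and the separator limit from the widely used Souders-Brown relation. All function names and any numbers fed into them are hypothetical.

```python
import math

R = 8.314462618  # universal gas constant, J/(mol K)


def gas_density(pressure_pa, temperature_k, molar_mass_kg_per_mol, z=1.0):
    """Gas density in kg/m^3 from the real-gas law: rho = p M / (Z R T)."""
    return pressure_pa * molar_mass_kg_per_mol / (z * R * temperature_k)


def pipe_velocity(mass_flow_kg_s, density_kg_m3, inner_diameter_m):
    """Mean gas speed in m/s in a circular pipe for a given mass flow."""
    area = math.pi * inner_diameter_m ** 2 / 4.0
    return mass_flow_kg_s / (density_kg_m3 * area)


def souders_brown_limit(k_m_s, rho_liquid, rho_gas):
    """Maximum gas speed in a separator: v = K sqrt((rho_l - rho_g) / rho_g).

    K is an empirical vessel-dependent factor; its value is an assumption here.
    """
    return k_m_s * math.sqrt((rho_liquid - rho_gas) / rho_gas)


def overloaded(components):
    """Return the names of components whose actual speed exceeds their limit.

    components maps a name to a tuple (actual_speed, maximum_speed).
    """
    return [name for name, (actual, limit) in components.items() if actual > limit]
```

Repeating the check for every part of the unit at the new flow rate shows which component, if any, limits the capacity.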

Case from RuG - Faculty of Medical Sciences (I)

students: Phebo Wibbens, Eelco Heerschop

contact: Prof. Dr. R.J. Vonk

supervisors: Prof. Dr. H. Duifhuis, Dr. H. Hasper

Our objective was to design a glucose-insulin regulation model. In itself, the regulation of glucose is not a very difficult process: it could be described with a couple of linear equations. Unfortunately, the concentration of glucose is influenced by many different factors. All these regulating factors influence each other, which gives rise to multiple feedback loops and makes an actual implementation of the model a lot more difficult.

To implement a glucose-insulin regulation model we need suitable software. In the past, some work was done on implementing a glucose-insulin model in the programming language Pascal. Nowadays we have graphical modelling tools at our disposal. With these tools a model is easier to implement. Moreover, the models are more flexible to use and maintain, and components of one model can easily be reused in another. One of the most powerful tools available is Simulink, which is part of the mathematical program Matlab. Design and implementation are relatively easy, although they require some mathematical knowledge from the user. After a successful implementation a graphical user interface can be built on top of the model, which makes it possible to use the model without any mathematical knowledge.
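To illustrate what "a couple of linear equations" with feedback can look like, here is a minimal sketch of a two-variable linear model integrated with the explicit Euler method. It is not the model built in this case study and it is written in Python rather than Simulink; the equations, coefficients and meal input are invented for illustration and have no physiological calibration.

```python
def simulate(minutes, dt=0.1, a=0.03, b=0.02, c=0.05, d=0.1,
             meal=(10.0, 20.0, 2.0)):
    """Simulate glucose (g) and insulin (i) deviations from their baselines.

    Assumed linear model (all coefficients are illustrative):
        dg/dt = -a*g - b*i + u(t)   glucose is cleared and lowered by insulin
        di/dt =  c*g - d*i          insulin is released in response to glucose
    meal = (start, stop, rate) defines the glucose input u(t).
    Returns a list of (time, g, i) tuples.
    """
    start, stop, rate = meal
    g = i = 0.0
    t = 0.0
    history = []
    for _ in range(int(round(minutes / dt))):
        u = rate if start <= t < stop else 0.0
        dg = -a * g - b * i + u
        di = c * g - d * i
        g += dt * dg
        i += dt * di
        t += dt
        history.append((t, g, i))
    return history
```

With these coefficients the feedback loop is stable: after the meal input stops, both deviations decay back towards zero. Adding further regulating factors means adding state variables and coupling terms, which is where a graphical tool becomes convenient.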

Case from RuG - Faculty of Medical Sciences (II)

students: Jur van den Berg, Barend van de Wal

contact: Prof. Dr. R.J. Vonk

"From heaps of data to biological understanding"

At the medical faculty of the University of Groningen, especially at the nutrition department, a lot of work is being done with mass spectrometers. A mass spectrometer is a machine that produces a mass spectrum from biological material, for example a liver cell. Such a mass spectrum (figure 1) indicates the intensities of certain molecular weights that can be found in the biological material. In the field of proteomics the intensity of specific proteins is of particular interest.

Figure 1

A mass spectrum may have remarkable peaks at some positions (figure 2). These can be indicators of diseases, but they may also simply indicate whether or not the test person has eaten.

Figure 2

Until now, such mass spectra were rarely available, but with the advent of a new generation of mass spectrometers it has become possible to generate huge amounts of data in a very short time. This has led to the necessity of effective data storage. The question is how we want to use this data in the future for diagnostics or other analysis, because this determines how the data has to be stored and therefore has a huge influence on the database design.

Often, biological data is much more complex than other data. Because it now concerns huge amounts of complex data, the question of how to handle this data is more relevant than ever.

Our assignment concerned the progress of others in the field of data management in relation to biology. Furthermore, we had to explore the market of this research area.

Our first results showed that there was no appropriate information available for our purpose, because our application was too specific: it concerned a specific area of mass spectrometry and a specific mass spectrometer. Our assignment therefore changed somewhat. We now had to design a database for storing the data from the mass spectrometer ourselves. It is of great importance to know beforehand which parameters have to be added as fields in the database. The experience of our contact person showed us that if the database architecture has to be changed after a while, it is better to start all over again. While working on this case it was very important to stay in close contact with our contact person, because of our unfamiliarity with concepts like 'pH-value' or 'electron ionizing voltage'.
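A minimal sketch of what such a database design could look like is given below, using Python's built-in `sqlite3` module. It is not the design produced in this case study: the three tables, their names and all fields are assumptions, with the pH value and ionizing voltage included only because they are the parameters mentioned above.

```python
import sqlite3

# Hypothetical schema: one sample can have several spectra,
# and each spectrum consists of many (mass, intensity) peaks.
SCHEMA = """
CREATE TABLE sample (
    id INTEGER PRIMARY KEY,
    description TEXT NOT NULL,
    ph_value REAL
);
CREATE TABLE spectrum (
    id INTEGER PRIMARY KEY,
    sample_id INTEGER NOT NULL REFERENCES sample(id),
    acquired_at TEXT NOT NULL,
    ionizing_voltage_v REAL
);
CREATE TABLE peak (
    spectrum_id INTEGER NOT NULL REFERENCES spectrum(id),
    mass REAL NOT NULL,
    intensity REAL NOT NULL
);
CREATE INDEX peak_by_mass ON peak(mass);
"""


def create_db():
    """Create an empty in-memory database with the sketch schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def store_spectrum(conn, sample_id, acquired_at, ionizing_voltage_v, peaks):
    """Store one spectrum and its (mass, intensity) peaks; return its id."""
    cur = conn.execute(
        "INSERT INTO spectrum (sample_id, acquired_at, ionizing_voltage_v) "
        "VALUES (?, ?, ?)",
        (sample_id, acquired_at, ionizing_voltage_v),
    )
    spectrum_id = cur.lastrowid
    conn.executemany(
        "INSERT INTO peak (spectrum_id, mass, intensity) VALUES (?, ?, ?)",
        [(spectrum_id, mass, intensity) for mass, intensity in peaks],
    )
    return spectrum_id


def spectra_with_peak_near(conn, mass, tolerance):
    """Return ids of spectra that have a peak within tolerance of a mass."""
    rows = conn.execute(
        "SELECT DISTINCT spectrum_id FROM peak "
        "WHERE mass BETWEEN ? AND ? ORDER BY spectrum_id",
        (mass - tolerance, mass + tolerance),
    )
    return [row[0] for row in rows]
```

Keeping acquisition parameters in their own columns from the start reflects the point made above: adding fields later tends to force a redesign.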

Case from ICT

students: Silvie Schoenmaker, Jan van der Ven

contact: F. de Roo

supervisor: Prof. Dr. L. Spaanenburg

ICT Groningen supplied Nippon '01 with a case concerning the IEEE 1394 standard, better known as FireWire. They were interested in the use of FireWire as a basis for networking, and research into connection speeds was to be done. The RuG supplied two computers and a FireWire cable, and ICT supplied the two FireWire cards (PCI IEEE 1394 from e-tech). The computers were a 300 MHz Pentium II with 128 MB of internal memory and a 150 MHz Pentium with 32 MB of internal memory. Windows ME was installed on both computers.

To start the research, a program was needed that could send packages of bytes back and forth and show the time taken. Before such a program could be written, a layer was needed to establish communication between the two computers. FireNet 2.0, an evaluation version that can be found on the Internet, was used for this. The program itself was created with Microsoft Visual C++ 5.0. It was based on the example program Chatter, to which a few changes and extensions were made. A command line interpreter for sending packages was added: typing @100@10@ would send a package of 100 bytes 10 times. Once the program was finished, testing could start. Because the measurements were saved in text files, they could easily be compared.
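The command format can be illustrated with a short sketch. It is written in Python rather than the Visual C++ used in the case study, it does not reproduce the Chatter-based program, and the `send` callable stands in for whatever function actually transmits the bytes; all names are hypothetical.

```python
import re
import time

# "@<size>@<count>@", for example "@100@10@": 100 bytes, sent 10 times.
COMMAND = re.compile(r"@(\d+)@(\d+)@")


def parse_command(text):
    """Return (package_size_in_bytes, repeat_count) for a command string."""
    match = COMMAND.fullmatch(text.strip())
    if match is None:
        raise ValueError("expected a command of the form @size@count@")
    return int(match.group(1)), int(match.group(2))


def run_command(text, send):
    """Send the requested packages and return (total_bytes, elapsed_seconds).

    send is any callable that accepts a bytes object; it is an assumption
    standing in for the real network call.
    """
    size, count = parse_command(text)
    payload = bytes(size)  # a package of zero bytes of the requested size
    started = time.perf_counter()
    for _ in range(count):
        send(payload)
    elapsed = time.perf_counter() - started
    return size * count, elapsed


def throughput_mbit_s(total_bytes, seconds):
    """Convert a byte count and a duration into Mbit/s (1 Mbit = 10^6 bits)."""
    return total_bytes * 8 / seconds / 1e6
```

Timing the whole batch rather than each package keeps the clock overhead small compared with the transfer itself.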

The FireWire cards are designed for speeds of up to 400 Mbit/s. According to the benchmarks in the documentation that came with FireNet, speeds of up to 160 Mbit/s had been attained. However, the speeds realized in our test set-up were much lower. Server to server reached 70 Mbit/s, client to client 25 Mbit/s, client to server 14 Mbit/s and server to client 23 Mbit/s. A couple of other tests were also done, namely file transfer from the client (15 Mbit/s), file transfer from the server (18 Mbit/s) and live video transfer (30 Mbit/s). This last test was from a device to the computer, which is the normal way of using FireWire.
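To put these figures in perspective, the short sketch below expresses each measured speed as a fraction of the 400 Mbit/s the cards are designed for. The numbers are the ones reported above; the variable and function names are only illustrative.

```python
NOMINAL_MBIT_S = 400.0

# Measured speeds in Mbit/s, as reported in the text.
MEASURED_MBIT_S = {
    "server to server": 70.0,
    "client to client": 25.0,
    "client to server": 14.0,
    "server to client": 23.0,
    "file transfer from client": 15.0,
    "file transfer from server": 18.0,
    "live video transfer": 30.0,
}


def fraction_of_nominal(speed_mbit_s, nominal_mbit_s=NOMINAL_MBIT_S):
    """Return the measured speed as a fraction of the nominal speed."""
    return speed_mbit_s / nominal_mbit_s


if __name__ == "__main__":
    for name, speed in MEASURED_MBIT_S.items():
        print(f"{name}: {fraction_of_nominal(speed):.1%} of nominal")
```

Even the best case, server to server, stays below a fifth of the nominal speed.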

There are several possible reasons why these speeds were so much lower. The TCP/IP protocol is reliable, but not efficient. The packages were sent using this protocol, which created a large amount of overhead that could have reduced the speed. Another problem might have been the reliability of Windows ME; according to the documentation, a bottleneck in data transfer can show up when using Windows 95/98/ME. Finally, using two different computers with different hardware profiles might have slowed down traffic considerably as well.

Coming to a final conclusion, the speed of the FireWire cable under these circumstances is not an improvement over other options. However, the situation might change and a faster alternative may emerge.

Case from Gasunie (I)

students: Stefan Wijnholds

contact: R. Abtroot, F.C. de Groot

supervisor: Dr. H. Hasper

The Gasunie is a company that trades and distributes natural gas. The Gasunie also has a research department that develops new applications for, and improvements to, today's gas-fired appliances and machines. As a tool in these development processes, models are made of appliances and of elements of machines. At the moment this is done by making a physical description of the appliance. This normally takes a lot of time, while the manufacturer of the appliance or machine has already performed a series of tests on it and therefore has data on its input-output characteristics. These can be used to make a predictor that predicts the output of the system in response to a given input. The aim was to find a way to make an accurate predictor for a multiple-input-multiple-output (MIMO) system using Matlab. Since manufacturers normally supply a set of step responses instead of a single, more complex response, the information from a series of step responses had to be combined to obtain the overall characteristics of the system. This was done by making a predictor based on one of the step responses and improving it by means of the other step responses. To facilitate this process a procedure was written in Matlab that performs this job automatically. This procedure was tested on a mixing valve.
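One simple way to turn step responses into a predictor is linear superposition: every change in an input contributes a scaled, time-shifted copy of that input's unit step response to the output. The sketch below shows this idea for one output with several inputs. It is written in Python, assumes a linear time-invariant system sampled at fixed intervals, and is not the Matlab procedure developed in this case study, which refines the predictor using several step responses.

```python
def predict_output(step_responses, inputs):
    """Predict one output of a linear system from unit step responses.

    step_responses[j][n] is the output n samples after a unit step on
    input j (held at its last value once the response has settled).
    inputs[j][k] is the value of input j at sample k; all inputs are
    assumed to be zero before sample 0 and to have equal length.
    """
    n_samples = len(inputs[0])
    y = [0.0] * n_samples
    for response, u in zip(step_responses, inputs):
        previous = 0.0
        for m in range(n_samples):
            delta = u[m] - previous  # change of this input at sample m
            previous = u[m]
            if delta == 0.0:
                continue
            for k in range(m, n_samples):
                # After the recorded response ends, keep its final value.
                idx = min(k - m, len(response) - 1)
                y[k] += response[idx] * delta
    return y
```

For a MIMO system the same function can be called once per output, each time with the step responses from every input to that output.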

On Friday March 23rd the results were presented to the Gasunie by means of a presentation and a written report. Although some improvements can still be made, my supervisor at the Gasunie, Rob Aptroot, concluded that this procedure is very useful since it saves a lot of time compared to physically modelling a system.

Case from Gasunie (II)

students: Gijs de Vries

contact: F.C. de Groot

supervisor: Dr. H. Hasper

At the moment most of the calculations within the Gasunie are done with the assistance of MS Excel. Because Excel has its limitations, the Gasunie wants to switch to Matlab. To make this a smooth switch, a link had to be created between Matlab and the programs currently used by the Gasunie.

The case started with analysing the output of HPVEE. HPVEE is a program used by the Gasunie for collecting all kinds of data from their experiments. After talking to Mr. de Groot the final representation of the data in Matlab was determined. Then the design of the different macros could be done.

The first one was a Matlab macro for reading HPVEE data into Matlab. After some testing with this macro the data structure could be finalised. When this was finished, a start was made on the design of the macros for Excel. Three macros were to be made. The two most important are the transfer macros: one for sending data to Matlab and one for retrieving data from Matlab. Besides those two, a small macro had to be made to reformat the data in Excel. This was needed because the date and the time were sometimes divided over two separate columns in Excel, while they form just one field in the Matlab data structure. With the aid of these macros, one can switch easily between Excel and Matlab, which the users have yet to explore.
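The reformatting step can be illustrated with a small sketch that merges separate date and time columns into a single timestamp field. It is written in Python rather than as an Excel macro, and the row layout and the date and time formats are assumptions.

```python
from datetime import datetime


def merge_date_time(rows, date_format="%d-%m-%Y", time_format="%H:%M:%S"):
    """Merge the first two columns (date text, time text) into one datetime.

    Each row is a sequence (date_text, time_text, value, ...); the formats
    are assumptions and must match how the spreadsheet stores the text.
    Returns a list of tuples (timestamp, value, ...).
    """
    merged = []
    combined_format = date_format + " " + time_format
    for row in rows:
        date_text, time_text, *values = row
        stamp = datetime.strptime(date_text + " " + time_text, combined_format)
        merged.append((stamp, *values))
    return merged
```

A single timestamp column keeps every measurement tied to one unambiguous moment, which is what a one-field time representation requires.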

The next step was to provide the Gasunie with information about scientific visualisation and to write some example macros in Matlab that used the data, so that the users have some guidance on how to work with it. Finally there was a presentation about the case. Because the Gasunie is not really interested in the design of the macros, the presentation was mostly aimed at the use of these macros and at how to work with the data in Matlab. Mr. de Groot was very content with the work, despite the fact that some of the macros did not work with the current configuration of their systems. After a few hours of work by, among others, the system administrator, this turned out to be a version problem. The test machine that was used for the case ran Windows 2000 as its operating system, while the desktop computers still use Windows 98. Since a software upgrade was due, this was not a problem. The users just have to wait a little longer before they can take advantage of this work.

Case from Mount Everest

students: Martijn Kuik, Arend Dijkstra

contact: Dr. V.J. de Jong

supervisor: Prof. Dr. L. Spaanenburg

Mount Everest is developing a course for the ICT Company CMG. In this course the subject "functional design" will be treated. In three parts of one week, experienced teachers will teach the students in the traditional way. Apart from this lecture-based approach, Mount Everest wants to teach the subject by modules over the Internet which can be used for studying at home. Between the regular lectures, students can look into parts of the subject by studying one of these modules.

Because the development of a complete online module is quite expensive and time-consuming it seems sensible to search the Internet for existing material. Parts of available courses can then be re-used by Mount Everest.

On the Internet a lot of information can be found. Universities in particular provide a lot of background material for their lectures on their sites, for example slides and exercises. Apart from the traditional "at random" presentations, some universities now use Blackboard technology to present information in an ordered way.

Unfortunately, it has not been possible to find a totally complete course. Although a large part of the content can be found, it seems necessary to write parts of the modules. Blackboard seems to be a good platform to present information, although it has not yet been fully developed.

Available information is becoming more and more interactive; for example, some books are nowadays accompanied by CD-ROMs or web pages. Interactive sources will gain even more influence on the way information is spread, but they will not completely replace traditional lectures. Interactive modules should therefore be seen as an aid to these lectures.

Case from Océ

students: Frank Wilschut, Judith Brouwer

contact: T. van Dijk

supervisor: Dr. H. Hasper

Context

Océ Research is a part of the Océ Group. Its goal is to research new technology and to find new areas of applications for Océ. Within this framework research is done in the field of archive and retrieval technology.

Assignment

The assignment from Océ Research involved the study of the infrastructural possibilities and limitations Océ could encounter when constructing an archive service on the Internet. Such an archive service makes it possible for companies to create a digital archive without having to buy and construct the necessary infrastructure, hardware and software. Companies and their employees should be able to access this archive through one or more types of communication, such as Internet and mobile phone. The purpose of the assignment was to give an insight into the means of communications necessary to provide multiple companies and their employees with an archive service. The secondary goal of this study was to investigate other aspects, which could give a better understanding of the feasibility of such an e-archive.

Solution

The total system of the archive service (including users) consists of a number of components, which have to be connected by wired or wireless communication systems. The main component of the system is the archive service itself, which consists of a number of archives for every participating company, equipped with some service products like search engines. Attached to the archive service is a backup service, which stores a copy of the information in the archives of the companies. The connection between the archive service and the backup service does not have to be as fast as the connection between the archive service and the companies: a daily backup will be sufficient.

The companies can be divided into three components: the main station of the company itself, employees who work at home and agents who travel and visit clients of the company. A possible participating company could be an insurance agency: some of the employees visit clients and need instantaneous access to the archive service at any time and in any place. It is also very likely that some of the employees work at home and that others work at the office.

The connection between these groups of users within one participating company can be established in two different ways. Either only the office (main station) is connected directly to the archive service, and the employees at home and the agents are connected to the office, or every user has his own connection to the archive service. The second option makes the infrastructure of the archive service more complicated (there is not only more than one company, but also more than one connection per company). It also requires more bandwidth, so the archive system will be more expensive.

In our case study we looked at different types of connections. Some connections have to be wireless (GSM, EDGE or even UMTS), which makes them slower and more expensive. Wired connections (ISDN, ADSL or dedicated lines) are much easier and safer. We compared the speed and the costs of the different connections. We also looked at the possibility for Océ to cooperate with a third party such as KPN to establish the connections between the archive service and the users. We gave an overview of the possibilities, but were not able to identify a "best solution", because some parameters (number of participating companies, number of connections per company, amount of information to be transferred, etc.) were unknown and hard to estimate.
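The kind of speed comparison described above can be sketched with two small helper functions. This is an illustration, not the calculation from the case study: the function names are hypothetical, protocol overhead is ignored, and the document sizes and volumes used with them would have to be estimated, which is exactly the difficulty noted above. The two rates in the table are the commonly quoted nominal figures for GSM circuit-switched data and a single ISDN B channel; rates for other connection types are left for the reader to supply.

```python
# Nominal line rates in kbit/s (1 kbit = 1000 bits).
NOMINAL_RATES_KBIT_S = {
    "GSM": 9.6,    # circuit-switched data
    "ISDN": 64.0,  # one B channel
}


def transfer_seconds(size_bytes, rate_kbit_s):
    """Time needed to move size_bytes over a link, ignoring all overhead."""
    return size_bytes * 8 / (rate_kbit_s * 1000)


def required_rate_kbit_s(documents_per_day, size_bytes, hours_per_day):
    """Average rate needed to move a daily document volume in the given hours."""
    total_bits = documents_per_day * size_bytes * 8
    return total_bits / (hours_per_day * 3600) / 1000
```

Comparing the required average rate with the nominal rate of each connection type gives a first indication of which links could carry a given company's traffic.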